1st Global AI Safety Summit 2023: Background and Purpose

The AI Safety Summit 2023, held at Bletchley Park, Buckinghamshire, on November 1 and 2, was a major international event championed by British Prime Minister Rishi Sunak. The summit also sought to position the post-Brexit UK as an intermediary between major powers such as the United States, China, and the EU.

Its central focus was to assess the risks posed by cutting-edge AI models and to formulate strategies that policymakers and other stakeholders can use to improve AI safety for the public good. The event was intended as the first in a series, with follow-up summits to be hosted by South Korea and France.

International Collaboration

Michelle Donelan, Britain’s technology secretary, highlighted the achievement of bringing key players in the AI field together under one roof. She announced the two follow-up AI Safety Summits, one in South Korea and another in France, as a sign of a sustained global commitment to addressing AI safety concerns.

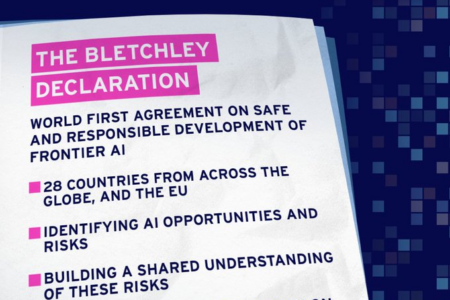

Twenty-eight countries, including the United States and China, along with the European Union, jointly endorsed the “Bletchley Declaration,” which stresses the need for international collaboration and a shared approach to overseeing AI. The declaration sets a two-part agenda: identifying AI risks of common concern and building a scientific understanding of them, and developing policies across countries to mitigate those risks.

China’s Participation

China’s presence at the summit was significant, given its central role in AI development. Nevertheless, some British lawmakers questioned China’s participation, citing the strained trust between Beijing and both Washington and many European capitals.

Key Outcomes and Highlights

1. The Bletchley Declaration: A primary goal of the AI Safety Summit was to establish global coordination and standards for AI safety. This led to the historic signing of the “Bletchley Declaration” by 28 countries and the EU, including major powers such as the US, UK, and China. The declaration calls for greater transparency from AI developers about their safety practices and encourages scientific collaboration to better understand AI’s potential risks. Although it lacks specific commitments, it represents a significant first step toward international norms and strategies for mitigating the dangers of AI.

2. Elon Musk’s Warnings: Entrepreneur Elon Musk, CEO of Tesla and SpaceX, reiterated his concerns about AI at the summit. He stressed the existential risks posed by advanced AI, describing it as “one of the biggest threats to humanity” because of its potential to surpass human intelligence.

3. UK’s Investment in AI Supercomputing: The UK government announced a £225 million investment in a new supercomputer, Isambard-AI, to be built at the University of Bristol. Isambard-AI is expected to support breakthroughs in areas including healthcare, energy, and climate modeling. Together with a second supercomputer, Dawn, this investment underscores the UK’s ambition to lead in AI while collaborating with global partners such as the US. Both supercomputers are set to become operational in summer 2024.

4. Global AI Dominance and Competition: The AI Safety Summit highlighted the intense global competition for AI dominance, with major players such as the US, the EU, and China vying to set AI rules and standards that align with their economic and political objectives. While the summit emphasized cooperation and safety, each of these powers is clearly engaged in a high-stakes technological arms race to secure a leading position in AI.
