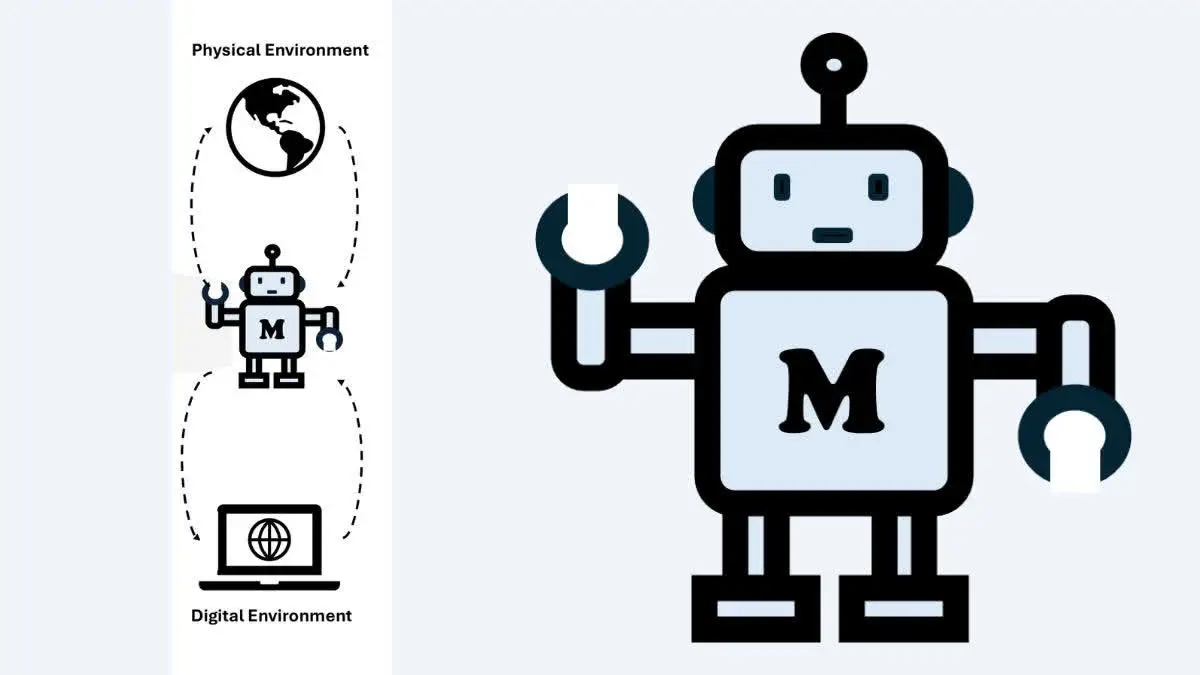

Microsoft has introduced Magma, a multimodal AI foundation model that understands both images and language and can act on them in digital and physical environments. The model can read and interpret real-world tasks and then carry them out, such as navigating software applications and controlling robot movements.

Magma was developed by researchers from Microsoft Research, the University of Maryland, the University of Wisconsin-Madison, KAIST, and the University of Washington. Its developers describe it as the first foundation model capable of interpreting multimodal inputs and grounding them in its surroundings.

What Makes Magma Unique?

Magma goes beyond traditional vision-language (VL) models by combining verbal and spatial intelligence. Existing VL models focus mainly on understanding images and text, whereas Magma also plans and executes actions. According to the researchers, this lets it perform UI navigation and robotic manipulation more precisely than previous models.

Key Features of Magma:

- Multimodal AI: Processes and understands both visual and linguistic data.

- Spatial Intelligence: Plans and executes actions in real-world scenarios.

- Robotic Manipulation: Controls and guides robots with enhanced precision.

- UI Navigation: Can interact with digital interfaces by recognizing clickable buttons and actionable elements.

- State-of-the-art Accuracy: Outperforms existing models on real-world tasks such as UI navigation and robotic manipulation, according to the researchers.

The Evolution of Vision-Language Models

From Vision-Language Models to Multimodal AI

Traditional VL models focus on image-text pairing but lack the ability to understand spatial relationships and take action. Magma advances this by introducing spatial intelligence, allowing it to predict movements, track objects, and execute commands based on both textual and visual inputs.

Understanding Magma’s Dual Intelligence:

- Verbal Intelligence – Retains traditional vision-language comprehension, allowing it to process text and images effectively.

- Spatial Intelligence – Enables the model to plan, navigate, and manipulate objects in physical and digital spaces.

How Magma Was Trained

Magma was trained on large-scale multimodal datasets consisting of:

- Images – Recognizing and labeling clickable items.

- Videos – Understanding object movements and interactions.

- Robotics Data – Learning real-world motion patterns for robotic control.

Researchers used two key labeling techniques to enhance Magma’s training:

- Set-of-Mark (SoM): Used for grounding clickable elements in user interfaces.

- Trace-of-Mark (ToM): Applied for tracking object movements in videos and robotics.

By utilizing SoM and ToM, Magma developed a strong understanding of spatial relationships, allowing it to predict and perform a variety of actions with minimal supervision.
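To make the two labeling ideas more concrete, here is a toy Python sketch. It assumes that candidate UI elements are already available as bounding boxes and that object positions are already tracked frame by frame. The names `Box`, `set_of_mark`, `mark_to_click`, and `trace_of_mark` are hypothetical and do not come from Magma's code. The sketch covers only the bookkeeping. With SoM, each clickable element gets a numeric mark that a model can name. With ToM, the positions of one mark across future frames form its movement trace. It does not draw marks on images or call any model.

```python
from __future__ import annotations

from dataclasses import dataclass


# Hypothetical bounding box for a candidate clickable element (pixel coordinates).
# This is an illustrative assumption, not Magma's actual data format.
@dataclass
class Box:
    x0: float
    y0: float
    x1: float
    y1: float

    def center(self) -> tuple[float, float]:
        return ((self.x0 + self.x1) / 2, (self.y0 + self.y1) / 2)


def set_of_mark(boxes: list[Box]) -> dict[int, Box]:
    """SoM-style labeling: assign numeric marks 1, 2, 3, ... to candidate elements."""
    return {mark: box for mark, box in enumerate(boxes, start=1)}


def mark_to_click(marks: dict[int, Box], chosen: int) -> tuple[float, float]:
    """Ground a predicted mark ID back to a concrete click point (the box center)."""
    return marks[chosen].center()


def trace_of_mark(frames: list[dict[int, tuple[float, float]]],
                  mark: int) -> list[tuple[float, float]]:
    """ToM-style labeling: collect one mark's position in each future frame
    where it is visible, giving its movement trace."""
    return [positions[mark] for positions in frames if mark in positions]


# Usage: two marked buttons on a screen, and one object tracked over three frames.
ui = set_of_mark([Box(0, 0, 100, 40), Box(0, 50, 100, 90)])
print(mark_to_click(ui, 2))  # (50.0, 70.0)

frames = [{1: (10.0, 10.0)}, {1: (12.0, 11.0)}, {1: (15.0, 13.0)}]
print(trace_of_mark(frames, 1))  # [(10.0, 10.0), (12.0, 11.0), (15.0, 13.0)]
```

In this toy framing, the model only needs to output a short mark ID or a short list of positions instead of arbitrary pixel coordinates. That is the intuition behind mark-based labeling, though Magma's actual training pipeline is more involved than this sketch.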

Capabilities of Magma in Real-world Applications

1. UI Navigation

Magma has performed strongly at navigating user interfaces. Tasks it has completed include:

- Checking the weather in a specific city.

- Enabling flight mode on a smartphone.

- Sharing files via digital platforms.

- Sending text messages to a designated contact.

2. Robotic Manipulation

Magma outperformed a fine-tuned OpenVLA model in robotic control tasks such as:

- Soft object manipulation (handling delicate items with precision).

- Pick-and-place operations (grabbing and relocating objects).

- Generalization tasks on real robots (adapting to new environments and tasks).

3. Spatial Reasoning and Real-World Action Execution

Magma surpasses GPT-4o on spatial reasoning tasks despite using significantly less training data. Its ability to predict future states and execute movements makes it a promising tool for robotics and interactive AI applications.

4. Multimodal Understanding

Magma performed exceptionally well in video comprehension tasks, outperforming leading models such as:

- VideoLLaMA2

- ShareGPT4Video

Despite using less video instruction-tuning data, Magma achieved better results on video benchmarks, demonstrating its data efficiency on multimodal tasks.

Future Implications of Magma in AI and Robotics

Magma’s capabilities open the door to new possibilities in AI-driven automation and robotics. Its ability to interact with digital interfaces and control robotic devices makes it a prime candidate for:

- AI-powered assistants capable of performing real-world tasks.

- Smart home automation with AI-driven robotic control.

- Healthcare robotics for patient assistance.

- Autonomous navigation systems for industrial applications.

As Magma continues to evolve, it could advance AI-driven automation by integrating verbal, spatial, and action-based intelligence in a single model.

Summary of the News

| Aspect | Details |
|---|---|
| Why in News? | Microsoft introduced Magma, a multimodal AI model that understands images and language and acts on them in real-world tasks. |
| Developed By | Microsoft Research, University of Maryland, University of Wisconsin-Madison, KAIST, and University of Washington |
| What Makes Magma Unique? | Integrates verbal and spatial intelligence; executes real-world actions, going beyond traditional vision-language models |
| Key Features | Multimodal AI: processes visual and linguistic data; Spatial Intelligence: plans and executes real-world tasks; Robotic Manipulation: controls robots with high precision; UI Navigation: recognizes and interacts with digital interfaces; State-of-the-art Accuracy: outperforms existing models in real-world tasks |
| How Was Magma Trained? | Dataset: large-scale multimodal data (images, videos, robotics data); Techniques: Set-of-Mark (SoM) for UI navigation and Trace-of-Mark (ToM) for tracking object movements |
| Real-world Applications | UI Navigation: checking weather, enabling flight mode, sharing files, sending texts; Robotic Manipulation: soft object handling, pick-and-place, adapting to new tasks; Spatial Reasoning: predicts future states and executes movements; Multimodal Understanding: outperforms leading models in video comprehension tasks |
| Future Implications | AI-powered assistants for real-world tasks; smart home automation; healthcare robotics for patient assistance; autonomous navigation for industrial applications |
